Is the ubiquity of artificial intelligence breaking the fast cheap good paradox? Is this the core of what AI is changing?

by Arne Hansen

These slides were presented at the Data-driven Smart Building Symposium held by IEA/EBC Annex 81 at the Tyree Centre, UNSW, Sydney, Australia.

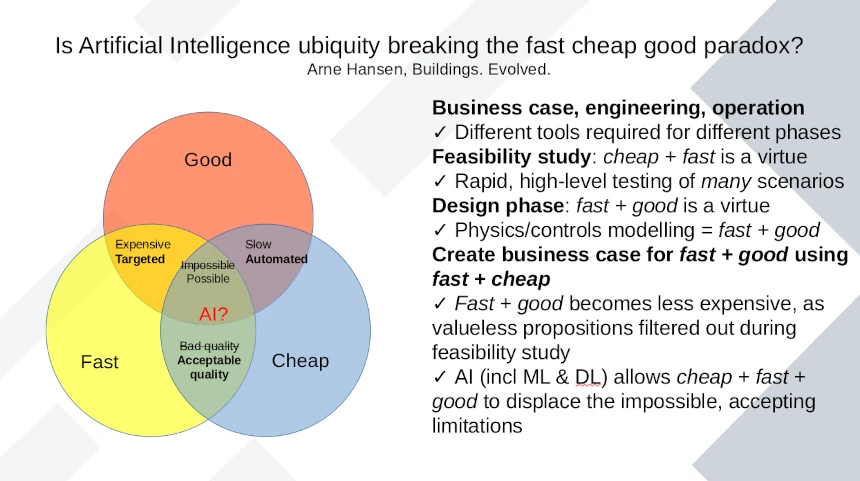

Almost everyone has encountered the Venn diagram of the fast cheap good paradox.

And, until recently, I’d posit the paradox held: you could pick any two of fast, cheap and good, but never all three. But can we really say the world hasn’t changed in light of not only OpenAI, but also the ubiquity and scale of compute available at record low prices? Artificial Intelligence (including Machine Learning and Deep Learning) has been around since the 1960s but, until compute became ubiquitous, it remained a largely unexplored and theoretical space.

Traditional approaches call for the fast and good. This is done without a meta-analysis of the financial, environmental or social benefit.

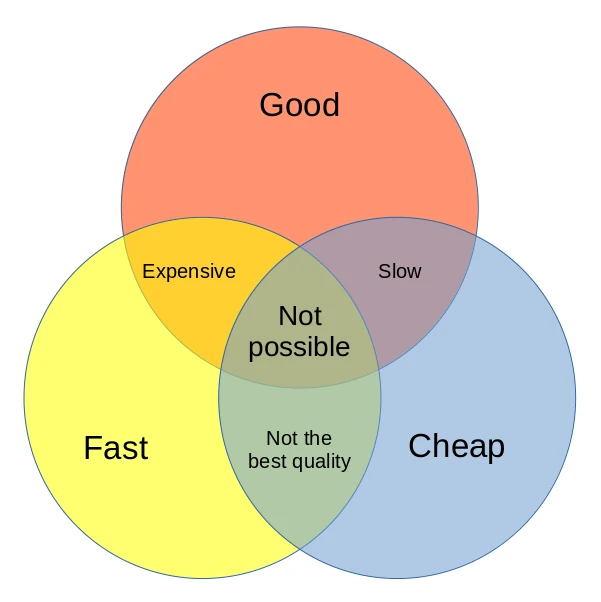

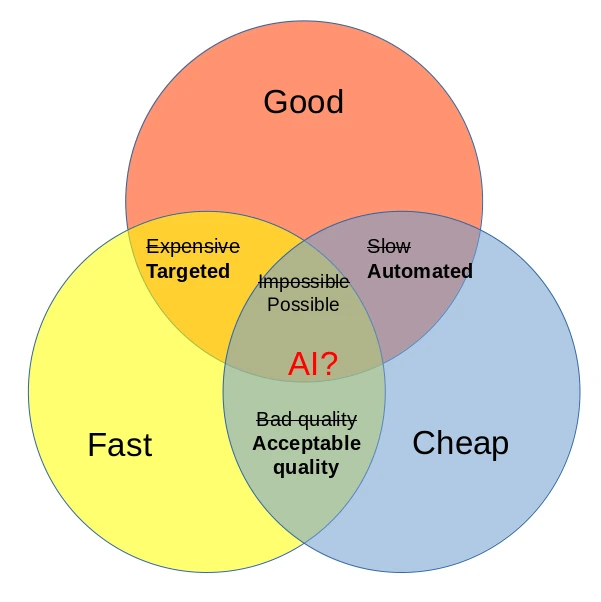

Here is where AI can step in.

Imagine that we can make fast and cheap work at an acceptable quality: rapidly working up what-if scenarios using a data-driven approach, rather than relying on traditional precedent data and assumptions.

So, if AI can help us make fast and cheap good, which it most certainly can, judging by ChatGPT, then we are shifting the dial and breaking the paradox.

Therefore, let’s reconsider what our Venn diagram looks like in this context.

Levels Of Detail

We require different tooling, as different levels of detail are required during project inception, design, construction and operation.

In Feasibility and Concept Design

Fast + cheap is a virtue

In this phase of works, we want rapid, high-level testing of many scenarios.

Data-driven applications = fast + cheap

But, in the Design phase

Fast + good is a virtue

Physics and controls modelling = fast + good

Create the business case for fast + good using fast + cheap

Fast + good becomes less expensive, as valueless propositions are filtered out during the feasibility study

AI (incl. ML & DL) allows cheap + fast + good to displace the impossible, accepting limitations

The full slide